Projects

The Projects at Cogrob

Agile - Autonomy Stack

This project focuses on developing a modular autonomy stack for on-campus vehicle navigation, spanning perception, planning, and control. The system is designed to be lightweight, flexible, and easy to iterate on, enabling rapid experimentation across different approaches and sensor configurations. The primary goal is to quickly evaluate what works in real-world campus environments, identify failure modes, and continuously improve system performance through fast iteration and deployment.


Autonomous Scooter

In the past decade, we have seen tremendous growth of interest in autonomous vehicles. Many companies, such as Waymo, Cruise, and Tesla, have been conducting research and testing on public streets. These companies tend to focus on traditional passenger vehicles and have achieved good results in structured environments such as highway driving. However, autonomous driving in dense urban areas remains an unsolved challenge. This project focuses on dense urban settings such as a university campus, and rather than a car, we have elected to automate an e-scooter. Simply walking around campus and other parts of San Diego, you can see these electric scooters scattered everywhere. However, they are often used only a couple of times per day before being left on the ground, at which point a company representative must pick them up and return them to their original locations. Imagine instead if the scooter could be summoned to your location via an app, drive itself back after dropping you off, and even drive itself to a charging station when necessary!


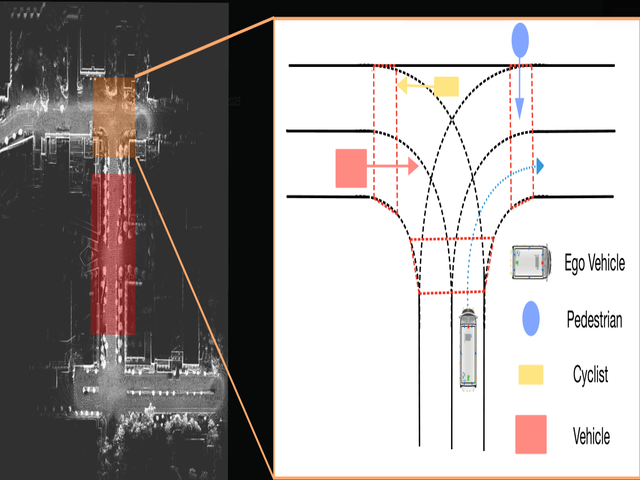

Behavior Modeling and Planning

Motion prediction and planning are typically treated as two separate tasks in the autonomous driving community. Despite significant advances in motion prediction, integrating these models into safety-critical planning systems remains challenging. In this project, we aim to develop an autonomous driving planning system that jointly optimizes reactive motion prediction and planning objectives.

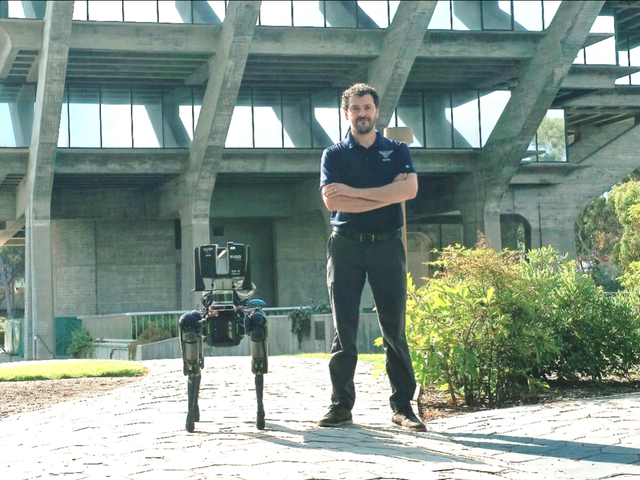

Collaborative Helper Autonomous Shipboard Explore Robot (CHASER)

The CHASER project aims to advance autonomous systems by enabling seamless interaction with humans, building on fundamental principles of robotics. The initiative seeks to integrate “follow-me” capabilities with artificial intelligence, allowing users to command the robot through human-level natural-language interaction. The project envisions an AI system akin to a ChatGPT agent embedded within the robot to facilitate intuitive, conversational communication. Building on a robust autonomy framework, CHASER incorporates functionalities such as mapping and navigation in both indoor and outdoor environments. The overarching goal is to develop a robot that collaborates with humans to accomplish tasks, emphasizing partnership and augmentation rather than human replacement.

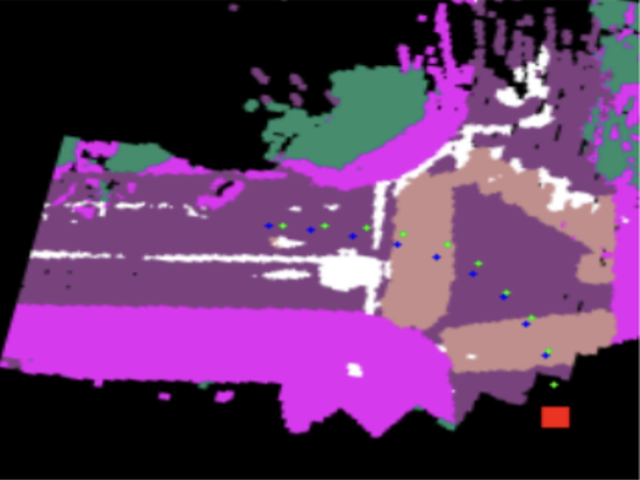

Dynamic Scene Modelling

Autonomous vehicles offer tremendous social and economic value. Before large-scale deployment is possible, however, their perception systems must make significant progress in robustness and generalization. To address these challenges, we bring context, that is, semantic meaning, into robot perception. Our approach is to conduct research on our full-scale test vehicles, our living laboratory. We evaluate state-of-the-art algorithms and identify and address the challenges that arise in real-world deployment. These challenges include long-term tracking and prediction of road users, dynamic scene modeling, and dynamic planning.


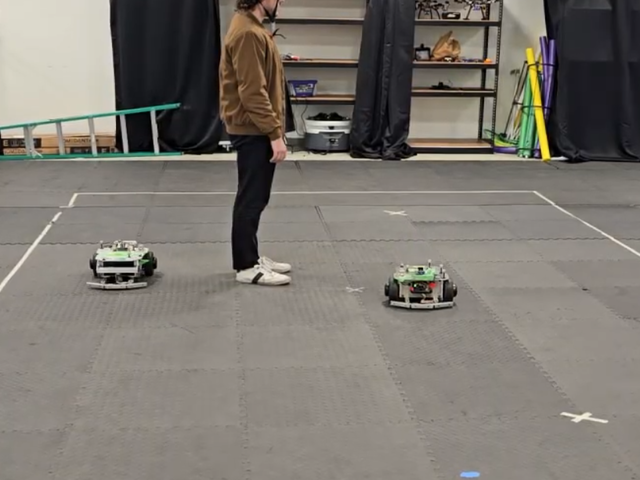

IHI Safe Warehouse Robotics

The overall objective of the project is to design a navigation framework for a fleet of robots that is scalable, robust, and safe. Safety in the control framework is enforced primarily through control barrier functions. Additionally, the project investigates whether a centralized or a decentralized approach to control and planning results in safer working conditions.
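The page does not describe the controller itself, so the following is only a minimal sketch of how a control barrier function can act as a safety filter. It assumes a single robot modeled as a single integrator (a velocity-controlled point in the plane, ẋ = u) and one circular keep-out zone; the function name `cbf_safety_filter`, the barrier h(x) = ‖x − c‖² − r², and the gain `alpha` are hypothetical choices, not the project's design. With a single constraint, the usual quadratic program (stay as close as possible to the nominal command while satisfying ∇h·u ≥ −αh) has a closed-form solution: project the nominal command onto the constraint half-space.

```python
def cbf_safety_filter(x, u_nom, center, radius, alpha=1.0):
    """Minimally modify u_nom so a single-integrator robot (x_dot = u)
    stays outside a circular keep-out zone (hypothetical example).

    Barrier: h(x) = ||x - c||^2 - r^2, where h >= 0 means safe.
    CBF constraint: grad_h(x) . u >= -alpha * h(x).
    min ||u - u_nom||^2 subject to this one linear constraint is solved
    in closed form by projecting u_nom onto the constraint half-space.
    """
    dx, dy = x[0] - center[0], x[1] - center[1]
    h = dx * dx + dy * dy - radius * radius
    a = (2.0 * dx, 2.0 * dy)   # gradient of h with respect to x
    b = -alpha * h             # right-hand side of the CBF constraint
    slack = a[0] * u_nom[0] + a[1] * u_nom[1] - b
    if slack >= 0.0:
        return u_nom           # nominal command already satisfies the constraint
    norm_sq = a[0] ** 2 + a[1] ** 2
    if norm_sq == 0.0:
        raise ValueError("robot is at the obstacle center; gradient is zero")
    scale = -slack / norm_sq   # smallest correction along the gradient direction
    return (u_nom[0] + scale * a[0], u_nom[1] + scale * a[1])


# Demo: drive toward a goal on the far side of an obstacle at the origin,
# integrating with forward Euler and tracking the smallest barrier value seen.
x = (-2.0, 0.1)
goal = (2.0, 0.0)
dt = 0.01
min_h = float("inf")
for _ in range(2000):
    u_nom = (goal[0] - x[0], goal[1] - x[1])   # simple proportional go-to-goal
    u = cbf_safety_filter(x, u_nom, (0.0, 0.0), 0.5)
    x = (x[0] + dt * u[0], x[1] + dt * u[1])
    min_h = min(min_h, x[0] ** 2 + x[1] ** 2 - 0.25)
print("smallest barrier value:", min_h)
```

For this particular barrier and forward-Euler integration, h stays nonnegative in discrete time as long as `alpha * dt <= 1`, because h(x + dt·u) = h + dt·∇h·u + dt²‖u‖². A fleet version would add one such constraint per robot pair and obstacle, which generally requires a numerical QP solver instead of the closed-form projection, and is where the centralized versus decentralized question arises.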

Mothership - UGV/UAV Coordination for Search and Rescue

The Mothership is a joint UAV-UGV platform that combines the strengths of both systems to compensate for their individual weaknesses. At a disaster site, identifying and rescuing victims is of the utmost importance and must be done as quickly and safely as possible to maximize their chances of survival. By leveraging the speed and aerial vantage point of UAVs, we can avoid rubble, navigate around dead ends, and identify victims quickly. This aerial information is transmitted to the UGV in lightweight message formats to assist path planning and navigation for victim rescue. Current work focuses on compressing high-fidelity features identified by the drone for transmission to the UGV over intermittent network connections, while maximizing the information usable for UGV planning. Future work will focus on improving the shared world model that both platforms build for navigation, as well as on developing an intuitive, intelligent interface that lets first responders control fleets of autonomous agents at disaster sites to identify and navigate to victims.


Multi-Robot Optimization

Many real-world tasks require teams of multiple robots to work together. For example, in the security domain, multiple robots can continuously monitor a physical perimeter to detect intrusions. Solving multi-robot problems, however, introduces several key challenges, such as learning and acting in the presence of other actors who may add complexity by changing their behavior. This research area spans a broad spectrum, from traditional game-theoretic methods to multi-agent reinforcement learning, with the aim of developing solutions to multi-robot tasks such as multi-robot patrolling and pursuit-evasion.

Robust Robot Learning

Our project aims to develop novel reinforcement learning frameworks that enable robots to adapt visually in dynamic manipulation and locomotion tasks. By combining advanced computer vision techniques with adaptive reinforcement learning algorithms, we train robots to generalize across diverse visual and physical conditions through techniques such as self-supervised representation learning and domain randomization.

Semantic Perception

A key capability of modern robots operating in human-centric environments is estimating the scene layout and associating semantics with scene components, which allows for contextual interaction. Intelligent robots in both indoor and outdoor environments rely heavily on interpreting their surroundings to support interaction and planning. We aim to combine foundational concepts of geometric localization and mapping from classical robotics with recent learning-based techniques for retrieving context and scene knowledge, in order to develop smart algorithms for tasks such as semantic scene understanding, mapping, and robot navigation.